Chapter 3 Estimating SLR Parameters

In Example 2.8, we saw how to interpret the values of estimated slopes and intercepts. But how do we obtain estimates of , , and ? Ideally, the line should be as close as possible to the data. But for any real-world dataset, the values will not lie directly in a straight line. So how do we define “close enough”?

3.1 Ordinary Least Squares Estimation of Parameters

The standard way is to choose values for and to minimize the sum of squared vertical distances between the observations and the regression line. We will denote these chosen values as and . Minimizing the distance between and the fitted line is equivalent to minimizing the sum of squared residuals. Confusingly, this is often called the residual sum of squares and it is defined as4 Estimating and by minimizing is called ordinary least squares (OLS) estimation of the SLR parameters. In the rest of this subsection we will explain how to minimize this function mathematically. But in practice this is done by software–so if you just want to know that, skip ahead to Section 3.3.

To find the minimum of the value in (3.1), we can use standard calculus steps for finding a local extrema:

- Find the derivatives of with respect to and .

- Set the derivatives equal to zero.

- Solve for the values of and

This procedures yields the “critical point” of (in “-space”), which we know to be a minimum because is a convex function.

Step 1: Find the two partial derivatives of :

Step 2: Set them equal to zero:

and simplify:

Step 3: Solve for in (3.2):

Plug into (3.3) and solve for :

Equations (3.4) and (3.5) together give us the formulas for computing both regression parameters estimates. and are the (ordinary) least squares estimators of and

3.2 Understanding with correlation

A common alternative to the form of in (3.5) is to define the following sums-of-squares:

The sums-of-squares and represent the total variation in the ’s and ’s, respectively. The value of is the co-variation between the ’s and the ’s and takes the same sign as their correlation. In fact, we can show that the Pearson correlation between and is equal to:

Using these quantities, an equivalent formula for is In this form, we can see that the slope of the regression line depends on the correlation betwee and and the amount variation in each. Importantly, notice that the sign of (i.e., whether it is positive or negative) is the same as the sign of the correlation . Thus, if two variables are positively correlated, their SLR slope will be positive. And if they are negatively correlated, their slope will be negative. We will return to this idea in Section 4.1.

3.3 Fitting SLR models in R

The task of computing and is commonly called “fitting” the model. Although we could explicitly compute the values using equations (3.4) and (3.5), it is much simpler to let R do the computations for us.

3.3.1 OLS estimation in R

The lm() command, which stands for linear model, is used to fit a simple linear regression model in R. (As we will see in Section 8, the same function is used for multiple linear regression, too.) For simple linear regression, there are two key arguments for lm():

- The first argument is an R formula of the form

y ~ x, wherexandyare variable names for the predictor and outcome variables, respectively. - The other key argument is the

data=argument. It should be given adata.frameortibblethat contains thexandyvariables as columns. Although not strictly required, thedataargument is highly recommended. Ifdatais not provided, the values ofxandyare taken from the global environment; this often leads to unintended errors.

Example 3.1 To compute the OLS estimates of and for the penguin data, with flipper length as the predictor and body mass as the outcome, we can use the following code:

Here, we have first loaded the palmerpenguins package so that the penguins data are available in the workspace. In the call to lm(), we have directly supplied the variable names (body_mass_g and flipper_length_mm) in the formula argument, which is unnamed. For the data argument, we have supplied the name of the tibble containing the data. It is good practice to save the output from lm() as an object, which facilitates retrieving additional information from the model.

To see the point estimates, we can simply call the fitted object and it will print the model that was fit and the calculated coefficients:

##

## Call:

## lm(formula = body_mass_g ~ flipper_length_mm, data = penguins)

##

## Coefficients:

## (Intercept) flipper_length_mm

## -5780.83 49.69To see more information about the model, we can use the summary() command:

##

## Call:

## lm(formula = body_mass_g ~ flipper_length_mm, data = penguins)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1058.80 -259.27 -26.88 247.33 1288.69

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -5780.831 305.815 -18.90 <2e-16 ***

## flipper_length_mm 49.686 1.518 32.72 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 394.3 on 340 degrees of freedom

## (2 observations deleted due to missingness)

## Multiple R-squared: 0.759, Adjusted R-squared: 0.7583

## F-statistic: 1071 on 1 and 340 DF, p-value: < 2.2e-16Over the following chapters, we will take a closer look at what these others quantities mean and how they can be used.

Sometimes, we might need to store the model coefficients for later use or pass them as input to another function. Rather than copy them by hand, we can use the coef() command to extract them from the fitted model:

## (Intercept) flipper_length_mm

## -5780.83136 49.68557Although the summary() command provides lots of detail for a linear model, sometimes it is more convenient to have the information presented as a tibble. The tidy() command, from the broom package, produces a tibble with the parameter estimates.

## # A tibble: 2 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) -5781. 306. -18.9 5.59e- 55

## 2 flipper_length_mm 49.7 1.52 32.7 4.37e-107The estimates can then be extracted from the estimate column.

## [1] -5780.83136 49.685573.3.2 Plotting SLR Fit

To plot the SLR fit, we can add the line as a layer to a scatterplot of the data. This can be done using the geom_abline() function, which takes slope= and intercept= arguments inside aes().

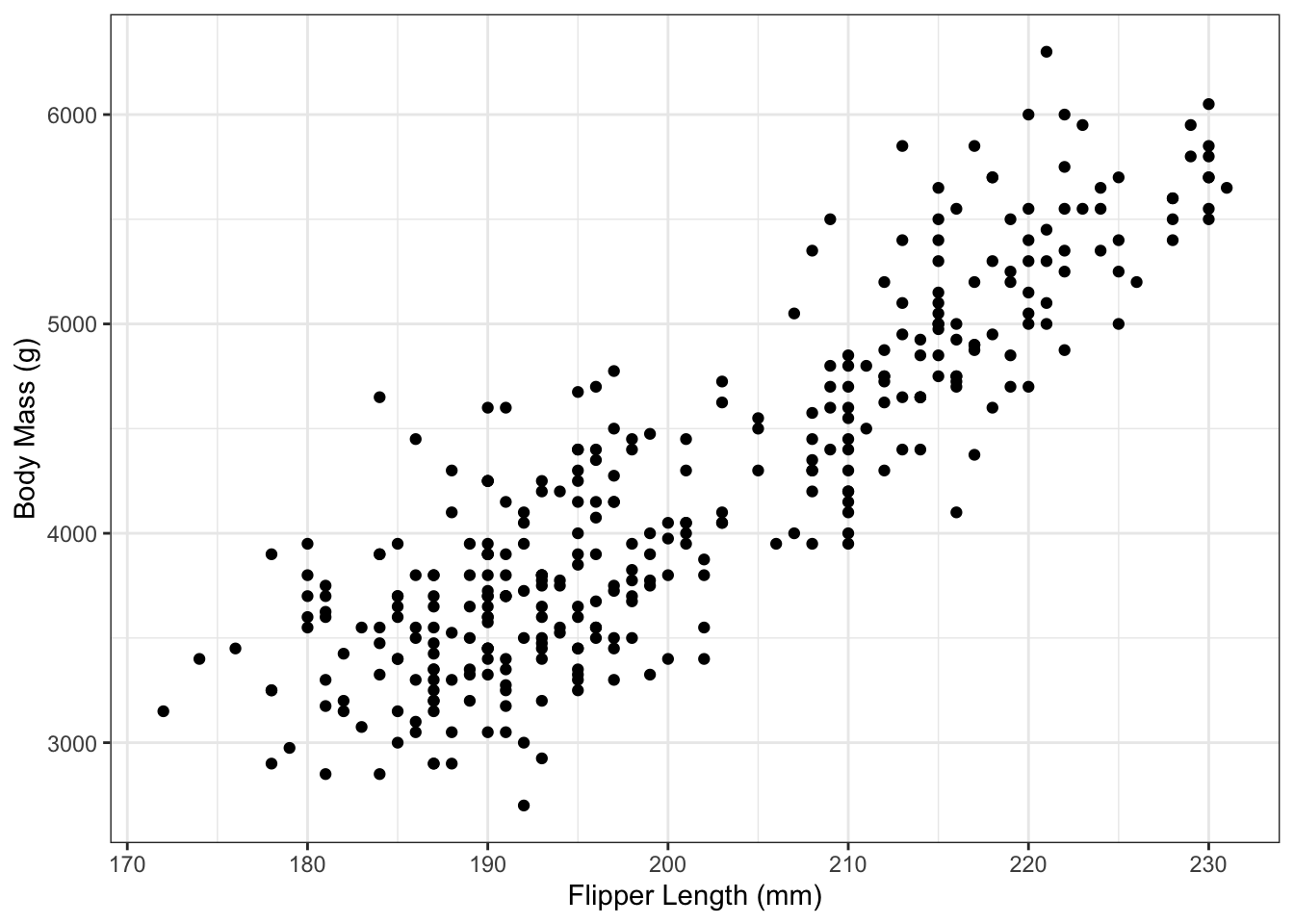

Example 3.2 To plot the simple linear regression line of Example 3.1, we first create the scatterplot of values:

g_penguin <- ggplot() + theme_bw() +

geom_point(aes(x=flipper_length_mm,

y=body_mass_g),

data=penguins) +

xlab("Flipper Length (mm)") +

ylab("Body Mass (g)")

g_penguin

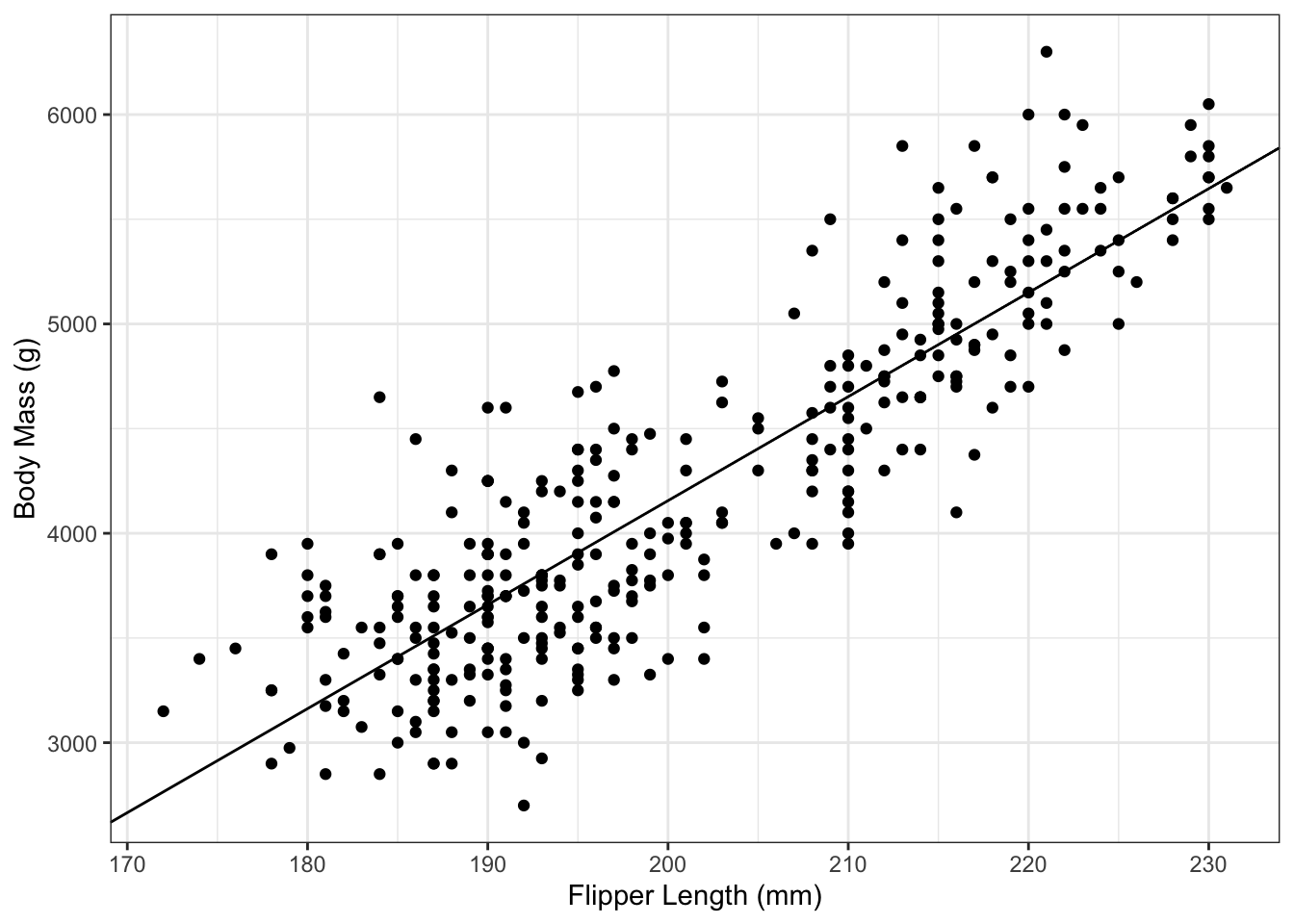

And then can add the regression line to it:

g_penguin_lm <- g_penguin +

geom_abline(aes(slope=coef(penguin_lm)[2],

intercept=coef(penguin_lm)[1]))

g_penguin_lm

It’s important to remember to not type the numbers in directly. Not only does that lead to a loss of precision, it’s also greatly amplifies the chances of a typo. Instead, in the code above, we have used the coef() command to extract the slope and intercept estimates.

An alternative approach, which doesn’t actually require directly fitting the model, is to use the geom_smooth() command. To use this approach, provide that function

- an

xandyvariable inside theaes(), - the dataset via the

dataargument - set

method="lm"(Note the quotes), which tellsgeom_smooth()that you want the linear regression fit - optionally set

se=FALSE, which will hide the shaded uncertainty. We will later see how these are calculated in Section 5.

For example:

g_penguin_lm2 <- g_penguin +

geom_smooth(aes(x=flipper_length_mm,

y=body_mass_g),

data=penguins,

method="lm",

se=FALSE)

g_penguin_lm2

What happens if you forget method="lm"? In that case, the geom_smooth() function uses splines:

This an extremely useful method for visualizing the trend in a dataset. Just note that it is not the regression line.

Example 3.3 Consider the bike sharing data from Section 1.3.4 and Figure 1.9. After fitting an SLR model with temperature as the predictor variable and number of registered users as the outcome, we obtain the line:

We can interpret these coefficients as:

- The estimated average number of registered users active in the bike sharing program on a day with high temperature 0 degrees Celsius is 1,371.

- A difference of one degree Celsius in daily high temperature is associated with an average of 112 more registered users actively renting a bike.

3.3.3 OLS estimation in R with binary x

If the predictor variable is a binary variable such as sex, then we need to create a corresponding 0-1 variable for fitting the model (recall Section 2.3.7). Conveniently, R will automatically create a 0-1 indicator from a binary categorical variable if that variable is included as a predictor in lm().

Example 3.4 (Continuation of Example 2.7.) Compute the OLS estimates of and for a linear regression model with penguin body mass as the outcome and penguin sex as the predictor variable.

We first restrict the dataset to penguins with known sex:

This ensures that our predictor can only take on two values (we will look at predictors with 3+ categories in Section 11).

We then call lm() using this dataset:

## (Intercept) sexmale

## 3862.2727 683.4118Note that R is providing an estimate labeled sexmale. The 0-1 indicator created by R automatically created the variable:

In equation (3.6), female penguins were the reference category and received a value of 0. R chose female as the reference category (instead of male) simply because of alphabetical order.

We can write the following three interpretations of , , and , respectively:

- The estimated average body mass for female penguins is 3,862 grams.

- The estimated average body mass for males penguins is 4,546 grams.

- The estimated difference in average body mass of penguins, comparing males to females, is 683 grams, with males having larger average mass.

3.4 Properties of Least Squares Estimators

3.4.1 Why OLS?

The reason OLS estimators and are so widely used is not only because they can be easily computed from the data, but also because they have good properties. Since and are random variables, they have a corresponding distribution.

We can compute the mean of : This means that is an unbiased estimator of . This is important since it means that, on average, using the OLS estimator will give us the “right” answer. Similarly, we can show that is unbiased:

However, it’s important to note that just because does not mean that . For a single data set, there is no guarantee that will be near . One way to measure how close is going to be to is to compute its variance. The smaller the variance, the more likely that will be close to .

We can compute the variance of explicitly:

It can also be shown that Both and are linear estimators, because they are linear combinations of the data :

The Gauss-Markov Theorem states that the OLS estimator has the smallest variance among linear unbiased estimators. It is the BLUE – Best Linear Unbiased Estimator.

In essence, the Gauss-Markov Theorem tells us that the OLS estimators are best we can do, at least as long as we only consider unbiased estimators. (We’ll see an alternative to this in Chapter 15).

3.5 Estimating

3.5.1 Not Regression: Estimating Sample Variance

Before discussing how to estimate in SLR, it’s helpful to first recall how we estimate the variance from a sample of a single random variable.

Suppose are iid samples from a distribution with mean and variance . The estimated mean is:

and the estimated variance is:

In , why do we divide by ? To understand this, first consider what would happen if we divided by instead: This estimator is biased by a factor of . If we instead divide by , then we obtain an unbiased estimator:

That explains the mathematical reason for dividing by . But what is the conceptual rationale? The answer is degrees of freedom. The full sample contains values. Computing the estimator requires first computing the sample mean . This “uses up” one degree of freedom, meaning that only degrees of freedom are left when computing .

This is perhaps easier to see with an example. Suppose . If and . What is ? It must be that . Knowing determines . In this way, computing puts a constraint on what the possible values of the data can be.

3.5.2 Estimating in SLR

Now, back to SLR. We want to estimate . Because and , it follows that Thus, an estimate of is an estimate of . A natural estimator of is Why divide by here? Since , there are 2 degrees of freedom used up in computing the residuals .

is called the “sum of squared residuals” or “residual sum of squares”

is called “mean squared residuals”

3.5.3 Estimating in R

When summary() is called on an lm object, the sigma slot provides for you:

## [1] 394.2782## [1] 155455.3The value of is also printed in the summary output:

##

## Call:

## lm(formula = body_mass_g ~ flipper_length_mm, data = penguins)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1058.80 -259.27 -26.88 247.33 1288.69

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -5780.831 305.815 -18.90 <2e-16 ***

## flipper_length_mm 49.686 1.518 32.72 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 394.3 on 340 degrees of freedom

## (2 observations deleted due to missingness)

## Multiple R-squared: 0.759, Adjusted R-squared: 0.7583

## F-statistic: 1071 on 1 and 340 DF, p-value: < 2.2e-16It’s also possible to compute this value “by hand” in R:

## [1] 155455.3## [1] 394.27823.6 Exercises

Exercise 3.1 Fit an SLR model to the bill size data among Gentoo penguins, using bill length as the predictor and bill depth as the outcome. Find and using the formulas and then compare to the value calculated by lm().

Exercise 3.2 Using the mpg data (see Section 1.3.5), use lm() to find the estimates of and for a SLR model with city highway miles per gallon as the outcome variable and engine displacement as the predictor variable. Provide a one-sentence interpreation of each estimate.

Exercise 3.3 Compute for the model in Exercise 3.2 using the formulas. Compare to the value calculated from the lm object.

Exercise 3.4 Consider the housing price data introduced in Section 1.3.3, with housing price as the outcome and median income as the predictor variable. Find the OLS estimates for a simple linear regression analysis of this data and provide a 1-sentence interpretation for each.

In most cases, we suppress the dependence on and and just write . But the dependence is included here to make the optimization explicit.↩︎